Importance of Cost Optimization

Cost optimization is essential for organizations that use AWS, for several reasons:

Control costs and stay within budget - AWS bills can grow quickly if costs are not monitored and optimized. By implementing cost optimization strategies, organizations can control their AWS spend and stay within their budgets.

Improve efficiency - Cost optimization efforts often reveal inefficiencies that can be improved. Optimizing resources and configurations can improve the efficiency of workloads running on AWS.

Avoid waste - Unused resources continue to incur costs on AWS. Cost optimization helps identify resources that are no longer needed so they can be terminated to avoid waste.

Prepare for growth - Optimizing costs now helps set a foundation for future growth. As workloads scale up, cost optimizations put in place earlier will continue to benefit the organization.

Better predictability - When costs are optimized, organizations have a better understanding of their AWS spend and can more accurately predict future costs. This improves financial planning and decision-making.

AWS offers many tools and services that help with cost optimization:

AWS Cost Explorer to track costs and usage over time

AWS Savings Plans for discounted rates in exchange for a usage commitment

Spot Instances for up to 90% cost savings

AWS Trusted Advisor for cost optimization recommendations

Rightsizing recommendations to match resources with workload needs

Auto Scaling to dynamically scale resources up or down based on demand

Instance Scheduling to stop instances during non-operating hours

And many more...

In summary, cost optimization is an essential part of managing resources effectively on AWS. Implementing cost optimization strategies from the beginning and continuously optimizing costs will help organizations get the most value from the AWS Cloud.

So the main reason why organisations are moving towards cloud providers is cost optimization, but it can only happen if we use the cloud provider effectively and efficiently.

Now, for cost optimization, we will create a Lambda function and write a Python script inside it using Boto3, which talks to the AWS API.

Introduction to Lambda Function

AWS Lambda is a computing service that lets you run your code in response to events without the need to provision or manage servers. It automatically scales your applications based on incoming requests, so you don't have to worry about capacity planning or dealing with server maintenance.

Some key points:

Lambda functions are event-driven. They are executed when triggered by events from other AWS services like S3, DynamoDB, API Gateway, etc. This allows you to build serverless applications.

You only pay for the compute time you consume. There are no servers to manage and you don't pay when your code is not running. This lowers the operational costs.

Lambda automatically scales. It can handle thousands of requests per second by provisioning more computing resources. You don't have to worry about scaling.

Lambda handles all the infrastructure management for you. This includes provisioning compute resources, monitoring functions and logging output.

Lambda integrates well with other AWS services. You can easily connect Lambda functions to services like API Gateway, S3, DynamoDB, etc. This allows you to build full serverless applications.

Lambda functions are short-lived. They are meant to execute individual tasks and then terminate. This makes serverless architectures inherently distributed and scalable.
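To make the event-driven model above concrete, here is a minimal sketch of a Python handler. The handler name `lambda_handler` matches the one used later in this post; the `Records` key is an assumption about the shape of the incoming event (it is what S3 and several other services send), and the response format is purely illustrative.

```python
import json


def lambda_handler(event, context):
    """Entry point that Lambda invokes once per triggering event."""
    # `event` carries the trigger payload as a dict;
    # `context` exposes runtime metadata such as the remaining time.
    records = event.get("Records", [])  # assumed event shape
    return {
        "statusCode": 200,
        "body": json.dumps({"records_received": len(records)}),
    }
```

The function does its work and returns; Lambda takes care of starting and stopping the environment around it.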

In this project, we will write a Python script that lists our EBS snapshots and identifies the stale ones: snapshots whose source volume is not attached to any EC2 instance, or whose volume no longer exists because the instance was deleted. The Lambda function deletes those snapshots, so it filters out all the EBS snapshots that are no longer useful.
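The decision rule described above can be sketched as a small pure function, separate from any AWS calls. This is only an illustration: `stale_reason` and `volumes_by_id` are hypothetical names, and the dictionaries are assumed to have the same shape as entries returned by `describe_snapshots` and `describe_volumes`.

```python
def stale_reason(snapshot, volumes_by_id):
    """Return why a snapshot looks stale, or None if it should be kept.

    snapshot: dict shaped like an entry of describe_snapshots()["Snapshots"].
    volumes_by_id: maps volume ID to a dict shaped like an entry of
        describe_volumes()["Volumes"]; deleted volumes are simply absent.
    """
    volume_id = snapshot.get("VolumeId")
    if not volume_id:
        return "no source volume recorded"
    volume = volumes_by_id.get(volume_id)
    if volume is None:
        return "source volume no longer exists"
    if not volume.get("Attachments"):
        return "source volume is not attached to any instance"
    return None  # volume exists and is attached: keep the snapshot
```

Keeping the rule separate from the delete call makes it easy to log what would be removed before anything is actually deleted.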

Introduction to EBS snapshots

Amazon EBS Snapshots provide a simple and cost-effective way to back up data stored on Amazon EBS volumes. Here are some key points about EBS Snapshots:

Snapshots are incremental backups that store only the blocks on the disk that have changed after the most recent snapshot. This minimizes storage costs and the time required to create the snapshot.

Snapshots occur asynchronously. When you create a snapshot, it happens immediately but the status remains pending until the snapshot is complete. The EBS volume remains available during this time.

You can create snapshots of both attached and detached EBS volumes. However, for a consistent backup, it is recommended to unmount the volume first before taking the snapshot.

Snapshots of encrypted EBS volumes are automatically encrypted. Volumes created from encrypted snapshots are also automatically encrypted.

You can create multi-volume snapshots across multiple EBS volumes attached to an EC2 instance. This allows for crash-consistent backups of applications that span multiple volumes.

EBS Snapshots integrate with AWS Data Lifecycle Manager to automate snapshot management and lifecycle policies. This helps reduce costs by automatically deleting old snapshots.

Snapshots provide several benefits like disaster recovery, data migration across regions/accounts, and improved backup compliance.

EBS Snapshots are stored in Amazon S3 and offer 99.999999999% durability. The Recycle Bin feature also allows the recovery of accidentally deleted snapshots.

Snapshot storage costs are based on the amount of data stored, not the size of the original volumes. You can use the EBS Snapshots Archive tier for long-term retention of snapshots at a lower cost.

In summary, EBS Snapshots provide an easy and cost-effective way to back up your block storage data on Amazon EBS volumes. The integration with services like DLM and S3 helps make snapshot management simple and secure.

You can also check the snapshot's timestamp. For example, if you set a retention period of 6 months, any snapshot older than 6 months can be deleted automatically.

To understand this better, we will go through a demo in which we create an EC2 instance with a volume attached, take a snapshot of that volume, and then clean up stale snapshots using a Lambda function.
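An age check like this could be sketched as follows. The helper name `is_older_than` is hypothetical, and treating "6 months" as 180 days is an assumption. The `start_time` argument is expected to be a timezone-aware `datetime`, which is what Boto3 returns in a snapshot's `StartTime` field.

```python
from datetime import datetime, timedelta, timezone


def is_older_than(start_time, max_age_days=180, now=None):
    """Return True if a snapshot's start time is older than max_age_days.

    start_time: timezone-aware datetime (e.g. snapshot["StartTime"]).
    max_age_days: retention period; 180 approximates six months (assumption).
    now: injectable clock for testing; defaults to the current UTC time.
    """
    now = now or datetime.now(timezone.utc)
    return now - start_time > timedelta(days=max_age_days)
```

A cleanup script could call this for each snapshot and delete only those for which it returns `True`.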

DEMO

Creating EC2 Instance

First, we will create an EC2 instance that has a volume attached to it.

Log in to your AWS account.

Now, navigate to the EC2 console and click "Launch Instance".

Name: test-co

Number of Instances: 1

Application and OS image: Ubuntu

Instance type: t2.micro

Key pair: create a new one or use the existing one

Keep the rest of the things as default and click on "Launch Instance"
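If you prefer scripting over the console, the same launch settings can be expressed as parameters for Boto3's `run_instances` call. This is a rough sketch: `build_launch_params` is a hypothetical helper, and the AMI ID and key pair name are placeholders you must supply yourself, since Ubuntu AMI IDs differ per region.

```python
def build_launch_params(ami_id, key_name):
    """Build run_instances keyword arguments matching the console steps above."""
    return {
        "ImageId": ami_id,          # an Ubuntu AMI ID for your region (placeholder)
        "InstanceType": "t2.micro",
        "KeyName": key_name,        # name of an existing key pair (placeholder)
        "MinCount": 1,
        "MaxCount": 1,
        "TagSpecifications": [
            {
                "ResourceType": "instance",
                "Tags": [{"Key": "Name", "Value": "test-co"}],
            }
        ],
    }


# Usage (needs boto3 and AWS credentials, so it is not executed here):
#   import boto3
#   ec2 = boto3.client("ec2")
#   ec2.run_instances(**build_launch_params("<ubuntu-ami-id>", "<key-pair-name>"))
```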

Here you can see that one EC2 instance has been created, and one volume has been created along with it. Now we will create a snapshot of that volume. (A snapshot is a point-in-time copy of the volume, so if our data is deleted or lost we can restore it from the snapshot. You can think of it as an image of the volume.)

Creating Snapshots

Navigate to EC2 -> Snapshots

Click on "Create snapshot"

Resource type: Volume

Volume ID: Select the volume of the EC2 instance that you have created just now

Click on Create snapshot
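The console steps above have a direct Boto3 equivalent, `create_snapshot`. The sketch below assumes you pass in an EC2 client and the ID of the volume created with the instance; the helper name and the description text are made up for illustration.

```python
def create_volume_snapshot(ec2, volume_id, description="test-co demo snapshot"):
    """Start a snapshot of the given volume and return the new snapshot ID.

    ec2: a boto3 EC2 client, e.g. boto3.client("ec2").
    The call returns right away; the snapshot stays in the "pending"
    state until the copy has finished.
    """
    response = ec2.create_snapshot(VolumeId=volume_id, Description=description)
    return response["SnapshotId"]


# Usage (needs boto3 and AWS credentials, so it is not executed here):
#   import boto3
#   snapshot_id = create_volume_snapshot(boto3.client("ec2"), "<volume-id>")
```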

Now, what if a developer deletes the EC2 instance because it is no longer needed, but forgets to delete its snapshots? If there are around 100 such snapshots, the developer keeps getting charged for snapshots that are of no use.

To solve this problem, we will write a Lambda function that checks for useless snapshots and automatically deletes them.

Creating Lambda Function

Now, we will write our Lambda Function:

Log in to your AWS account and search for "Lambda".

Now, click on "Create function".

Now, you will see three options for how you want to write your code:

Author from scratch (Start with a simple Hello World example)

Use a blueprint (Build a Lambda application from sample code and configuration presets for common use cases)

Container image (Select a container image to deploy for your function)

Note: For learning purposes, we will select "Author from scratch".

Function Name: test-co

Runtime: Python 3.10

Note: Lambda supports several runtimes, such as Node.js, Ruby and Python. We use Python here.

Keep the rest of the things as default and click on "Create function".

Now, if you scroll down you will see the "Code" tab.

Paste the following code inside the code source

import boto3

def lambda_handler(event, context):
    ec2 = boto3.client('ec2')

    # Get all EBS snapshots
    response = ec2.describe_snapshots(OwnerIds=['self'])

    # Get all active EC2 instance IDs
    instances_response = ec2.describe_instances(Filters=[{'Name': 'instance-state-name', 'Values': ['running']}])
    active_instance_ids = set()

    for reservation in instances_response['Reservations']:
        for instance in reservation['Instances']:
            active_instance_ids.add(instance['InstanceId'])

    # Iterate through each snapshot and delete if it's not attached to any volume or the volume is not attached to a running instance
    for snapshot in response['Snapshots']:
        snapshot_id = snapshot['SnapshotId']
        volume_id = snapshot.get('VolumeId')

        if not volume_id:
            # Delete the snapshot if it's not attached to any volume
            ec2.delete_snapshot(SnapshotId=snapshot_id)
            print(f"Deleted EBS snapshot {snapshot_id} as it was not attached to any volume.")
        else:
            # Check if the volume still exists
            try:
                volume_response = ec2.describe_volumes(VolumeIds=[volume_id])
                if not volume_response['Volumes'][0]['Attachments']:
                    ec2.delete_snapshot(SnapshotId=snapshot_id)
                    print(f"Deleted EBS snapshot {snapshot_id} as it was taken from a volume not attached to any running instance.")
            except ec2.exceptions.ClientError as e:
                if e.response['Error']['Code'] == 'InvalidVolume.NotFound':
                    # The volume associated with the snapshot is not found (it might have been deleted)
                    ec2.delete_snapshot(SnapshotId=snapshot_id)
                    print(f"Deleted EBS snapshot {snapshot_id} as its associated volume was not found.")
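One thing to keep in mind: a single `describe_snapshots` call may return only part of the results when an account has many snapshots. A possible refinement, sketched here under the assumption that you want to walk every page, is to use Boto3's built-in paginator. The helper name `iter_own_snapshots` is hypothetical.

```python
def iter_own_snapshots(ec2):
    """Yield every snapshot owned by this account, one page at a time.

    ec2: a boto3 EC2 client, e.g. boto3.client("ec2").
    """
    paginator = ec2.get_paginator("describe_snapshots")
    for page in paginator.paginate(OwnerIds=["self"]):
        # Each page is a normal describe_snapshots response dict.
        yield from page["Snapshots"]


# Usage inside the handler (not executed here):
#   for snapshot in iter_own_snapshots(ec2):
#       ...  # apply the same checks as in the function above
```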

Here we have imported Boto3, the AWS SDK for Python, which is very well documented.

Now, click on Deploy and then click on Test.

You will be redirected to the "Configure test event" dialog, where you need to create a new event.

Test event action: Create new event

Event name: test

Event sharing settings: private

Keep the rest of the things as default and save the event.

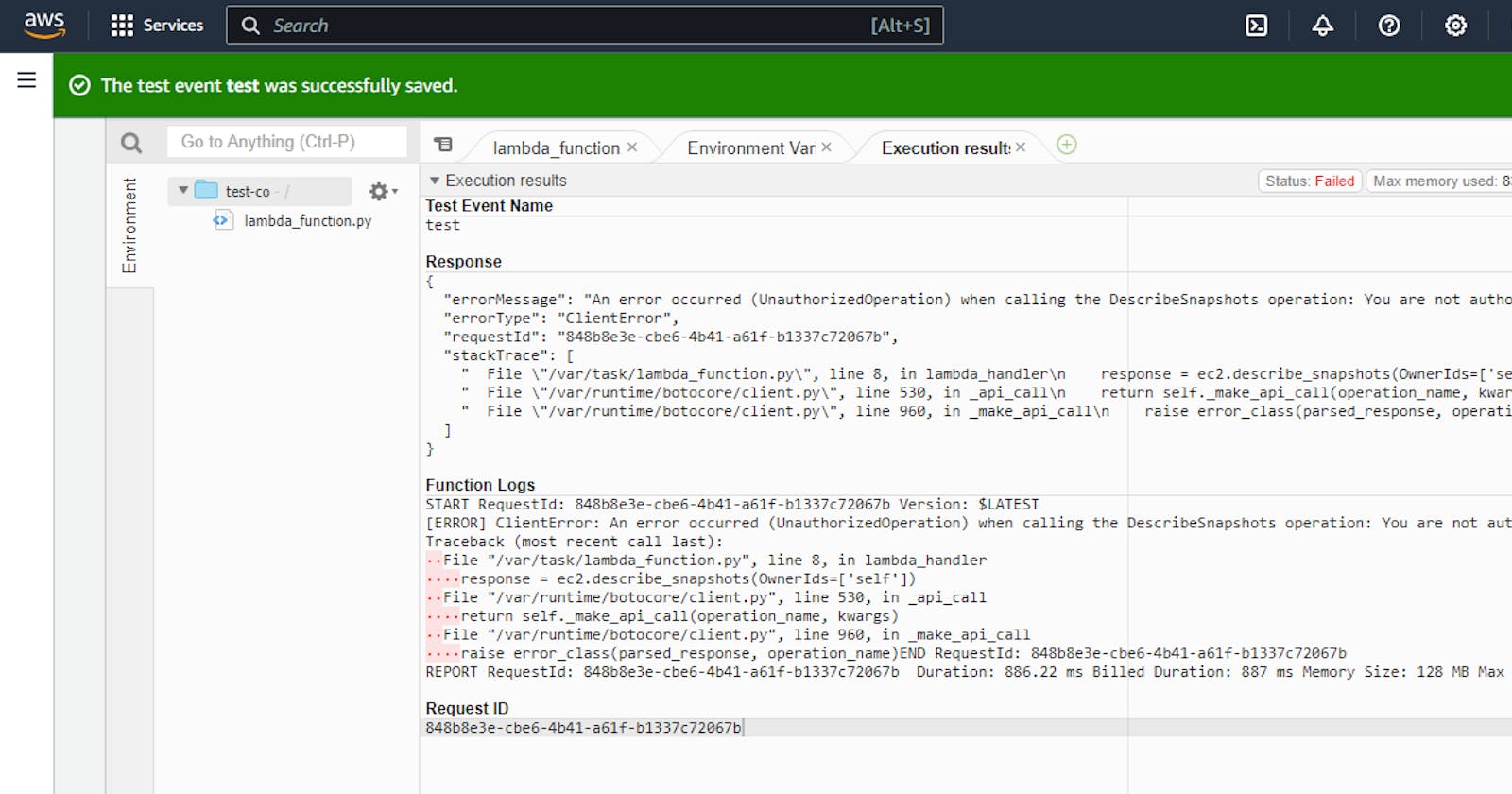

Now click on Test again.

Here you will run into an error, because the configured timeout is 3 seconds but the function takes longer than 3 seconds to execute.

To increase the timeout, navigate to:

Configuration -> General configuration -> click on Edit

Now scroll down to Timeout and increase it by 10 seconds.
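The same setting can also be changed from code with the Lambda API's `update_function_configuration` call. This is only a sketch: `set_function_timeout` is a hypothetical helper, and the timeout value in the usage comment is illustrative, so pick whatever your function actually needs.

```python
def set_function_timeout(lambda_client, function_name, timeout_seconds):
    """Set a Lambda function's timeout and return the value now in effect.

    lambda_client: a boto3 Lambda client, e.g. boto3.client("lambda").
    """
    response = lambda_client.update_function_configuration(
        FunctionName=function_name,
        Timeout=timeout_seconds,
    )
    return response["Timeout"]


# Usage (needs boto3 and AWS credentials, so it is not executed here):
#   import boto3
#   set_function_timeout(boto3.client("lambda"), "test-co", 10)  # 10 is illustrative
```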

Now, we also need to attach permissions to the function's execution role so that it can read EC2 instances and manage EBS snapshots.

For demo purposes, we will give the role full access, but at the enterprise level you should grant only the specific permissions needed for EBS snapshots.
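As a rough idea of what a narrower policy might look like, the sketch below builds an IAM policy document containing only the four EC2 actions that the function in this post calls. The helper name is hypothetical and the `"Resource": "*"` scope is an assumption made for brevity; you may want to restrict it further in a real account.

```python
import json


def snapshot_cleanup_policy():
    """Return an IAM policy document covering only the calls the function makes."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "ec2:DescribeSnapshots",
                    "ec2:DescribeInstances",
                    "ec2:DescribeVolumes",
                    "ec2:DeleteSnapshot",
                ],
                "Resource": "*",  # assumption: not scoped to specific resources
            }
        ],
    }


# Print the JSON so it can be pasted into the IAM policy editor.
print(json.dumps(snapshot_cleanup_policy(), indent=2))
```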

Now, if you click on Test again, you will see that the snapshot of the volume that you deleted has been deleted as well.

That's a wrap!